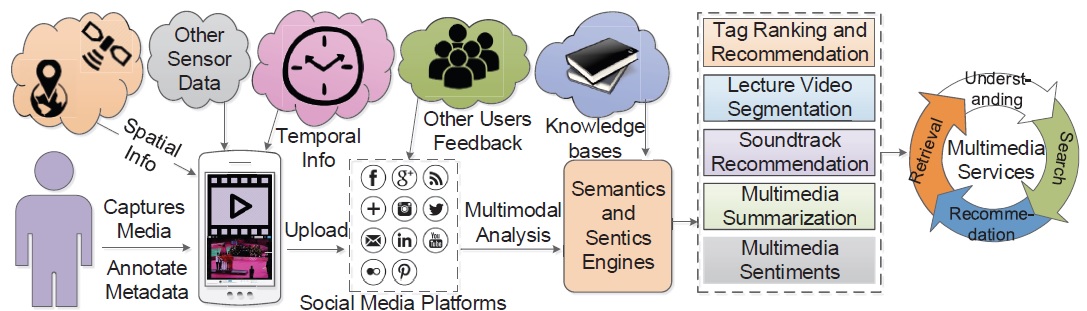

In recent years, a huge amount of user-generated content (UGC), such as text, images, and videos, has accumulated on the web. UGC available on different platforms helps social media companies sense the feedback, opinions, and interests of their users and provide services accordingly. However, due to the vast amount of data and the inherent noise in social media content, it is often difficult to extract useful information from a single modality. It is therefore essential to leverage information from multiple modalities to reduce noise in social media content. We leverage both multimedia content and contextual information to address several important problems, such as fake news detection, trolling detection, hate-speech detection, popularity prediction for photos, soundtrack recommendation for videos, and event summarization. At MIDAS@IIITD, we focus on building efficient fusion mechanisms using deep neural network techniques, which can help social media companies provide a better service to their users. Our recent papers on multimodal social media analysis have been published in top-tier conferences such as ACM Multimedia, WWW, and NAACL.